![Increasing Retention With Push, In-App & Email [Part 1]](https://phiture.com/wp-content/uploads/2018/07/Increasing-Retention-With-Push-In-App-Email-Part-1-min.jpeg)

NOTE: This is part one of a three-part series. The second part is ‘Developing a CRM strategy for increasing Retention’. The third part is ‘Implementing the Strategy’.

In this article, I’m sharing some practical tips on how to go about improving retention in B2C mobile apps, employing strategic use of CRM as a lever for this improvement.

From my former role leading the Retention team at SoundCloud, I learned first-hand how channels such as Push, Email and, particularly, in-app messages can deliver great impact on user engagement and retention.

After I left SoundCloud and co-founded Phiture, the team and I began exploring whether the use of these channels can be systemized into a repeatable playbook for user retention that works across different app categories. After a period of heads-down work with clients toward this goal, I felt it was time to share some of the learnings and the approach that I’ve seen work well across different types of apps and environments with varying levels of tech and process sophistication.

A Quick Retention Primer

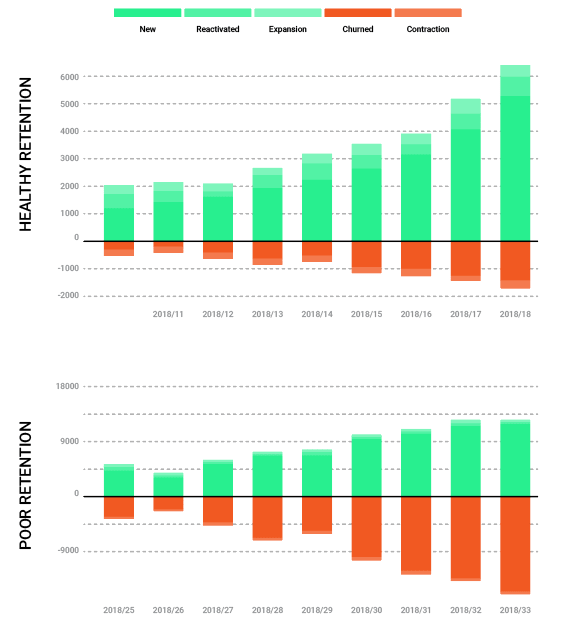

Healthy retention is the cornerstone of growth; an increase in retention will deliver compound returns over the long term and provide a sustainable foundation for acquisition efforts.

A Growth Accounting view demonstrates the value of healthy retention (image adapted from here)

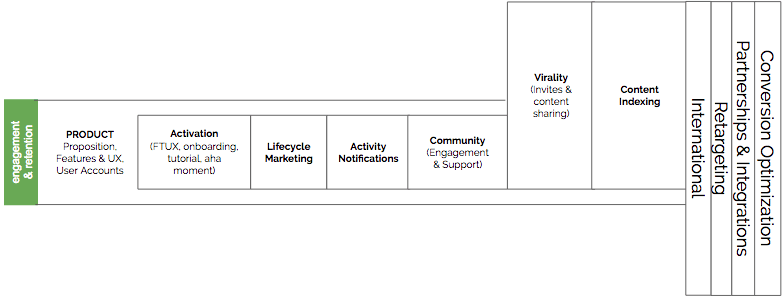

The Engagement + Retention layer of the Mobile Growth Stack makes clear that the key lever is Product; without a product that users find valuable, other activities such as lifecycle marketing and activity notifications will not yield growth in the long run and can even be counter-productive. However, if there is a reasonable level of Product-Market Fit, these activities can be powerful levers for engaging and retaining users in greater numbers.

The Engagement + Retention Layer of the Mobile Growth Stack has Product at its core

User retention is a complex, trailing metric. It’s not an easy number to move, and it can be difficult to understand why it moves when it changes. Literally *everything* affects retention:

- Changes to acquisition methods, including targeting, messaging and channels

- Changes to the product itself (especially changes that are exposed to new user cohorts, such as the onboarding experience)

- Changes to the way the product is positioned and described in the app store, press coverage, company website: all of these surfaces are exposed to potential users who form their first opinions about the product and expectations about the value it can bring to them

- Targeted Lifecycle Marketing Campaigns and Activity Notifications sent to users over channels such as push, in-app messaging and email

- Interactions with Customer Support or a broader user Community

It can’t be overstated that retention begins with awareness and acquisition; if initial expectations — formed in the app store or from press and advertising — are not aligned with the reality users experience in the product, those users will not successfully activate and will churn quickly.

Given that retention is affected by so many factors, it is of critical importance that experiments and activities designed to improve retention are properly instrumented with control groups and long-term holdout groups.
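One way to keep a long-term holdout stable is to derive group membership from a hash of the user ID, so that a user lands in the same group on every device and in every session. The sketch below is a minimal illustration of that idea, not a description of any particular tool; the function name, the experiment label and the 10% holdout size are all assumptions.

```python
import hashlib

def assign_group(user_id: str, experiment: str, holdout_pct: float = 0.10) -> str:
    """Deterministically place a user in 'holdout' or 'treatment'.

    The experiment label is mixed into the hash so that different
    experiments get independent splits (hypothetical convention).
    """
    digest = hashlib.sha256(f"{experiment}:{user_id}".encode("utf-8")).hexdigest()
    # Map the first 8 hex characters to a number in [0, 1).
    bucket = int(digest[:8], 16) / 0x100000000
    return "holdout" if bucket < holdout_pct else "treatment"

# Illustrative user IDs only.
users = [f"user-{i}" for i in range(10_000)]
groups = [assign_group(u, "retention-program") for u in users]
holdout_share = groups.count("holdout") / len(groups)
print(f"holdout share: {holdout_share:.3f}")
```

Users in the holdout receive no lifecycle messaging at all, which makes it possible to measure the combined effect of the whole program rather than only individual campaigns.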

Engagement vs Retention

Engagement and retention are inextricably linked. User retention (users returning for another session in the app) ultimately stems from engagement (what the users do in that session); without meaningful engagement, users will not stick around. Understanding the dynamics of user engagement is therefore a key goal when seeking to understand and then to influence retention.
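To make the definition concrete, here is a minimal sketch of a Day-N retention calculation, assuming retention is counted as "had a session exactly N days after the first session". Other definitions (for example "on or after day N") are equally valid; the user IDs and dates are made up for illustration.

```python
from datetime import date

# Hypothetical input: the dates on which each user had at least one session.
sessions = {
    "u1": [date(2018, 7, 1), date(2018, 7, 2), date(2018, 7, 8)],
    "u2": [date(2018, 7, 1)],
    "u3": [date(2018, 7, 1), date(2018, 7, 8)],
    "u4": [date(2018, 7, 1), date(2018, 7, 2)],
}

def day_n_retention(sessions_by_user, n):
    """Share of users with a session exactly n days after their first session."""
    retained = 0
    for days in sessions_by_user.values():
        first = min(days)
        if any((d - first).days == n for d in days):
            retained += 1
    return retained / len(sessions_by_user)

d1 = day_n_retention(sessions, 1)
d7 = day_n_retention(sessions, 7)
print(f"D1 retention: {d1:.0%}, D7 retention: {d7:.0%}")
```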

Start with an Audit

It’s really important to get a good understanding of the current situation from technical, analytics, qualitative, and strategic perspectives, before diving into activities to try to improve retention. At Phiture, we structure our retention audit into three distinct phases:

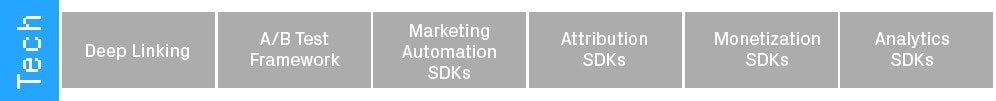

Audit Phase 1: Tech + Data Audit

In this phase, we conduct a review of the enabling tech stack and data integrations.

The objective here is to assess the current technical capability to measure retention and its inputs, as well as the capacity to influence retention in a measurable way with marketing automation over push, in-app and email channels.

A quick evaluation of the current capabilities covers these areas:

- Deep Linking: are there deep links implemented that would enable messaging campaigns to take users to every screen and feature within the app?

- A/B Test Framework: Is there a solution in place to do native A/B testing of product and UX changes?

- Attribution SDK: are we able to attribute app installs to traffic sources? (This is important for analyzing the impact of acquisition efforts on retention)

- Analytics: are users and user behavior being tracked in a way that enables cohort analysis, segmentation, funnel analysis, etc.?

- Marketing Automation: If there is some kind of CRM/Marketing Automation capability in place (either an in-house system or — more likely — a 3rd party marketing automation solution), some additional things should be reviewed:

  - Push and email opt-in rates: are they being measured and how do they benchmark against similar apps?

  - Are the Events (things users do) and Attributes (things we know about the user, sometimes referred to as ‘user properties’) that are being sent into the CRM system well documented and consistently named and implemented across platforms (iOS, Android + possibly others such as Web App, Smartwatch or client applications for connected devices such as Amazon Echo or Roku boxes)?

  - Are the Events and Attributes covering key actions within the app and suitable for segmentation, targeting, triggering, and personalization of messaging?

It’s likely that some of these checks will turn up gaps in the tech stack, or in the data integrations between some of the tools or data silos, resulting in some homework to do to get things in better shape for measuring and influencing engagement and retention. Things like getting deeplinks into shape or adding extra events, event properties and user attributes can likely run in parallel with the next phase of the audit. If, however, there are significant gaps in analytics tracking, it may be necessary to rectify this situation before progressing further.
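Part of the event and attribute check can be automated. The following sketch assumes that a list of event names can be exported per platform and that the team has agreed on snake_case naming; both are assumptions, as are the event names themselves. It flags names that break the convention and events that are missing on a platform.

```python
import re

# Hypothetical event names as sent to the CRM tool by each client.
events_by_platform = {
    "ios": {"track_played", "playlist_created", "SignUpCompleted"},
    "android": {"track_played", "playlist_created", "sign_up_completed"},
    "web": {"track_played", "sign_up_completed"},
}

# Assumed convention: lowercase words separated by underscores.
SNAKE_CASE = re.compile(r"^[a-z]+(_[a-z0-9]+)*$")

def audit_events(by_platform):
    """Return (names violating the convention, events missing per platform)."""
    all_events = set().union(*by_platform.values())
    badly_named = sorted(e for e in all_events if not SNAKE_CASE.match(e))
    missing = {
        platform: sorted(all_events - names)
        for platform, names in by_platform.items()
    }
    return badly_named, missing

badly_named, missing = audit_events(events_by_platform)
print("Naming violations:", badly_named)
for platform, names in missing.items():
    print(f"Missing on {platform}:", names)
```

In this made-up example the sign-up event is tracked under two different names, which would silently split any segment or trigger built on it.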

Audit Phase 2: Retention + Engagement Data Analysis

This phase is all about deriving insight from the data, with the ultimate aim of understanding the retention dynamics of the app and identifying drivers of retention.

It’s important to have a decent amount of reasonably clean usage data, stretching back as long as possible (at least 6 months and ideally a year or more). This isn’t always possible in a fast-moving startup environment where things get broken regularly and when tracking is sometimes forgotten or implemented patchily. While it’s possible to take a pragmatic approach and work with imperfect data, the insights yielded may be fewer and require further validation.

Natural Usage Frequency

User retention strategy should be based upon a realistic expectation of usage frequency. Only a few apps attain the status of an Instagram-like social network that commands the attention of millions of users throughout the day. Developing an understanding of natural usage patterns will help when designing lifecycle campaigns and notifications to reinforce this usage or, in the best case, to intensify or grow it.

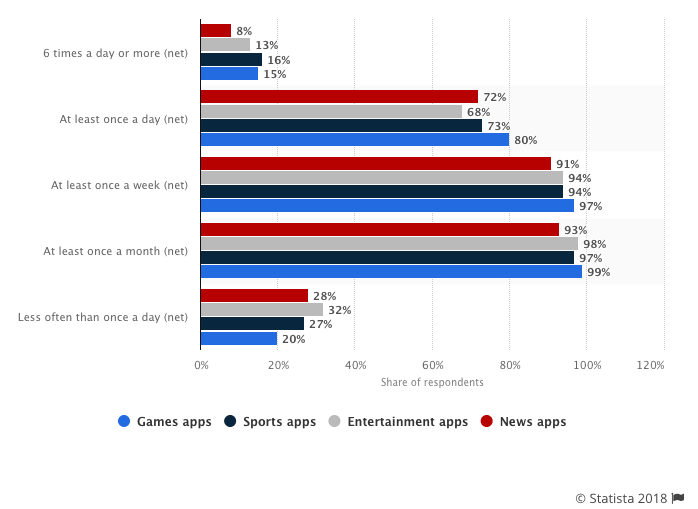

Natural Usage Frequency for selected US mobile app categories. Source
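As a rough illustration of how natural usage frequency might be estimated from an app's own data, the sketch below computes the median gap between active days for each user and then the median across users. The input structure and the choice of the median as the summary statistic are assumptions; the dates are invented.

```python
from datetime import date
from statistics import median

# Hypothetical input: the days on which each user was active.
active_days = {
    "u1": [date(2018, 7, 1), date(2018, 7, 2), date(2018, 7, 3), date(2018, 7, 5)],
    "u2": [date(2018, 7, 1), date(2018, 7, 8), date(2018, 7, 15)],
    "u3": [date(2018, 7, 2), date(2018, 7, 4), date(2018, 7, 9)],
}

def median_gap_days(days):
    """Median number of days between consecutive active days (None if < 2 days)."""
    ordered = sorted(set(days))
    gaps = [(b - a).days for a, b in zip(ordered, ordered[1:])]
    return median(gaps) if gaps else None

per_user = {user: median_gap_days(days) for user, days in active_days.items()}
typical_gap = median(g for g in per_user.values() if g is not None)
print(per_user)
print(f"Typical gap between active days: {typical_gap} days")
```

A typical gap of around a week suggests a weekly-use product, in which case daily push notifications are likely to feel out of step with how people actually use the app.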

There will likely be different usage frequencies for different levels of engagement; there will likely be identifiable segments (ideally mapped to psychographic personas) that use the app in different ways, at different intensity. Analysis of the usage data, in the best case, yields discernible engagement clusters that deliver two things:

- A segmentation approach that will facilitate targeted communications to users of differing levels of engagement (e.g. casual users, power users, weekend users, etc.)

- A good understanding of the current maximum level of engagement within the app (i.e. ‘how much are power users engaging and with which features?’)

The second point gives a decent target to aim for when it comes to increasing engagement. Further analysis of the differences (behavioral, demographic, acquisition source, etc.) of users in these different clusters may yield additional insight that can inform the strategy for increasing engagement and retention.
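A very simple starting point for such a segmentation is to bucket users by the number of days they were active in the last 28 days. The thresholds and segment names below are illustrative assumptions; in practice they should come out of the cluster analysis rather than be fixed up front.

```python
from collections import Counter

def engagement_segment(active_days_last_28: int) -> str:
    """Bucket a user by active days in the last 28 days (assumed thresholds)."""
    if active_days_last_28 >= 20:
        return "power"
    if active_days_last_28 >= 8:
        return "core"
    if active_days_last_28 >= 1:
        return "casual"
    return "dormant"

# Hypothetical per-user counts of active days in the last 28 days.
active_day_counts = {"u1": 25, "u2": 3, "u3": 0, "u4": 12, "u5": 22, "u6": 1}

segments = {user: engagement_segment(n) for user, n in active_day_counts.items()}
segment_sizes = Counter(segments.values())
print(segment_sizes)
```

The resulting segment label can then be sent to the marketing automation tool as a user attribute and used for targeting.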

Identifying Drivers of Retention

Next, it’s useful to look for early events that are predictive of longer-term retention.

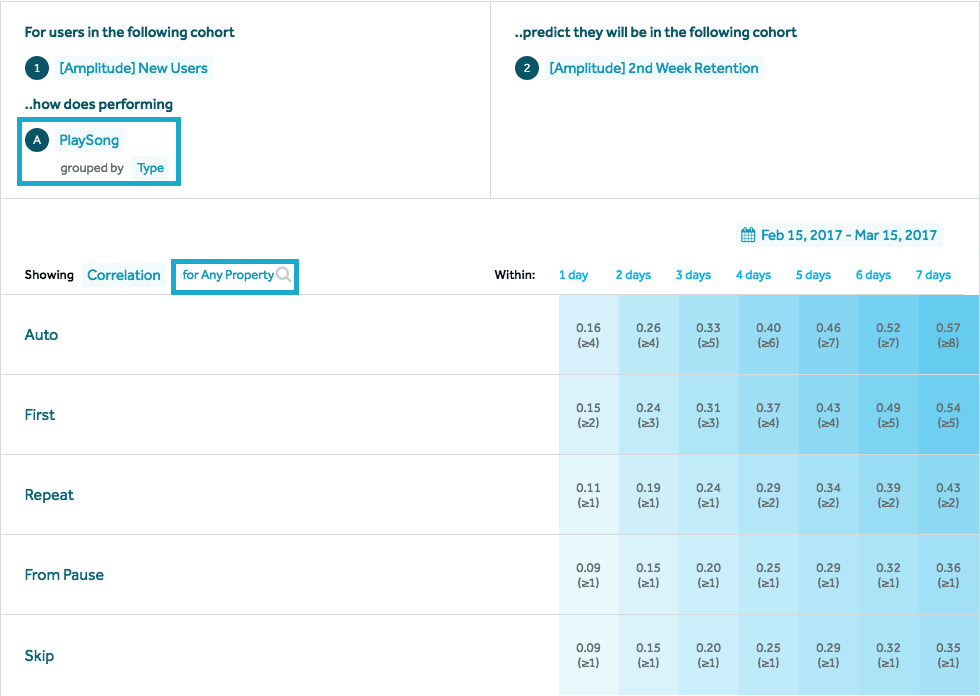

There are a number of analytical methods that can be applied here, but the aim of all of them is to tease out the behavioral differences (i.e. actions the user takes, tracked as events) that differentiate highly-retained users from those that fail to stick with the product. Linear regression is a common approach to searching for relationships between an outcome variable (here, retention) and the variables that are correlated with it.
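As a minimal sketch of this kind of analysis, the code below fits an ordinary least-squares line between the number of times a user performed an action in their first week and a 0/1 flag for whether they were still active on day 30, and also reports the Pearson correlation. The data, the action ("saves") and the day-30 definition are all made up for illustration. Because the outcome is binary, logistic regression is often a better fit in practice; the linear version is shown only because it is the method discussed here.

```python
from math import sqrt

# Hypothetical per-user data: saves in first week, retained at day 30 (1/0).
saves = [0, 0, 1, 1, 2, 3, 3, 4, 5, 6]
retained = [0, 0, 0, 1, 0, 1, 1, 1, 1, 1]

def simple_linear_regression(x, y):
    """Return (slope, intercept, pearson_r) for a least-squares fit of y on x."""
    n = len(x)
    mean_x = sum(x) / n
    mean_y = sum(y) / n
    sxx = sum((xi - mean_x) ** 2 for xi in x)
    syy = sum((yi - mean_y) ** 2 for yi in y)
    sxy = sum((xi - mean_x) * (yi - mean_y) for xi, yi in zip(x, y))
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    r = sxy / sqrt(sxx * syy)
    return slope, intercept, r

slope, intercept, r = simple_linear_regression(saves, retained)
print(f"slope={slope:.3f} intercept={intercept:.3f} r={r:.3f}")
```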

In the best case, a simple linear regression demonstrates a high correlation between one or more actions and longer-term retention of users who do these actions in their first few sessions. In many cases, there won’t be a standout relationship, or the data will show that (surprise, surprise) users who complete almost any action many more times than the average user are strong candidates for being highly retained. In other words, the most engaged users are also the most highly retained. This is normal, so it’s important to look for ‘tipping points’ where users who complete a key action a certain number of times are significantly more likely to be retained than those that complete it fewer times.
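One simple way to look for such a tipping point is to compare, for each threshold k, the retention of users who completed the action at least k times with the retention of users who completed it fewer times, and then look for the threshold with the largest gap. This is a sketch of that idea on invented data, not a reproduction of how any analytics product computes it.

```python
# Hypothetical rows: (times the key action was done in week 1, retained at day 30).
rows = [(0, 0), (0, 0), (1, 0), (1, 1), (2, 0),
        (3, 1), (3, 1), (4, 1), (5, 1), (6, 1)]

def retention_by_threshold(rows, max_k):
    """For each k, return (retention of users with >= k actions, retention of the rest)."""
    table = {}
    for k in range(1, max_k + 1):
        at_least = [kept for count, kept in rows if count >= k]
        fewer = [kept for count, kept in rows if count < k]
        if at_least and fewer:
            table[k] = (sum(at_least) / len(at_least), sum(fewer) / len(fewer))
    return table

table = retention_by_threshold(rows, max_k=6)
# The candidate tipping point is the threshold with the widest retention gap.
best_k = max(table, key=lambda k: table[k][0] - table[k][1])

for k, (above, below) in table.items():
    print(f">= {k} actions: {above:.0%} retained vs {below:.0%} below threshold")
print("Candidate tipping point:", best_k)
```

With real data, very high thresholds will contain few users, so it is worth checking the group sizes before trusting the gap.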

Amplitude’s Compass feature (shown above) provides reasonable out-of-the-box regression analysis. (It’s also usually possible to export event data from other analytics setups to do this kind of analysis manually)

There may well not be clear quantitative signals that indicate an obvious path to higher retention. Even if the data does show that users who, for example, add three items to their saved items list are better retained, there is still the question of whether this is correlation or causation:

Correlation: The users who do action X more times are more likely to be retained simply because they display higher levels of engagement; they were interested enough in the product to do the action more times.

Causation: Users who complete this action experience more value from the product and hence are more likely to continue to use it.

An increasing number of companies are offering help with predictive analytics, including several that are either building it into their existing analytics products or providing it as a service.

However you perform this analysis, the important thing is to do your best to validate your findings with qualitative research: user interviews, in-app or email surveys and in-depth user testing are all fantastic, and typically underutilized, ways to get a better understanding of what makes users stick around and what turns them off. (Check out this great practical guide to conducting such research by Adeline Lee for more help on this topic.)

The insights gleaned from this analysis phase feed into the Retention Strategy, which I’ll cover in Part II of this series.

Audit Phase 3: Existing CRM (Lifecycle + Activity Notifications) Review

The final phase of a Retention Audit focuses on the current status of CRM activities. The goal is to get a good grasp of what’s being done in terms of marketing automation, what strategy is driving these activities, and what impact they are driving.

Typically, we see companies using one of the mobile-centric marketing automation tools for push and possibly in-app messaging and often a separate tool for email.

In practical terms, this means:

- Reviewing any existing lifecycle campaigns: what are they designed to achieve? Where do they fit in a broader understanding of the user journey? Is impact being measured properly against control groups and holdouts? Assuming measurement is in place, what is the contribution of each campaign, and of the lifecycle program as a whole, toward key metrics?

- As above for any activity notifications (triggered by external events rather than user behavior)

- Checking whether there is a consistent naming convention and organization in the dashboard of whatever marketing automation tool is being used

- What level of personalization is applied? Are there opportunities to personalize further? (Note that personalization is a key way to improve the relevance of messages)

- Are all relevant channels (typically Push, In-App, Email, and In-App notifications feed / inbox) being utilized?

- Are Notification Badges being used effectively? (Check out this great read about the impact of notification badges from John Egan, Growth Engineering Lead at Pinterest). Do these badges lead the user back to somewhere relevant in the app (e.g. a notifications inbox), or simply dump users back into the app?

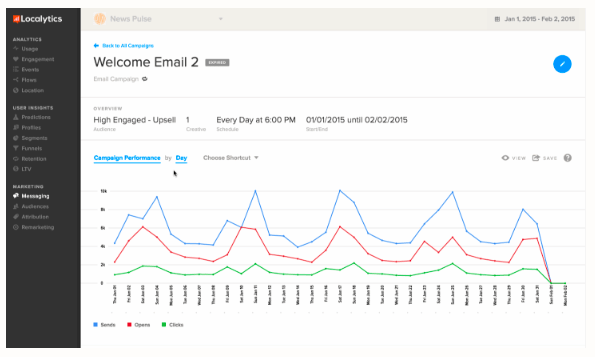

Digging into campaign performance (Source: Localytics)
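When a campaign has a control group, its contribution can be estimated by comparing the retention of users who received it with the retention of the control. The sketch below computes absolute and relative lift and a two-proportion z-test using only the standard library. The group sizes and retention counts are invented, and the z-test is one reasonable choice of significance test rather than the method used by any specific tool.

```python
from math import erf, sqrt

def lift_vs_control(treated_n, treated_retained, control_n, control_retained):
    """Compare retention of a treated group against its control group."""
    p_t = treated_retained / treated_n
    p_c = control_retained / control_n
    # Pooled retention rate under the null hypothesis of no difference.
    pooled = (treated_retained + control_retained) / (treated_n + control_n)
    se = sqrt(pooled * (1 - pooled) * (1 / treated_n + 1 / control_n))
    z = (p_t - p_c) / se
    # Two-sided p-value from the standard normal CDF.
    p_value = 2 * (1 - 0.5 * (1 + erf(abs(z) / sqrt(2))))
    return {
        "absolute_lift": p_t - p_c,
        "relative_lift": (p_t - p_c) / p_c,
        "z": z,
        "p_value": p_value,
    }

# Hypothetical numbers: 9,000 users received the campaign, 1,000 were held out.
result = lift_vs_control(9000, 2250, 1000, 220)
for name, value in result.items():
    print(f"{name}: {value:.4f}")
```

The same comparison applied to a long-term holdout that receives no messaging at all gives an estimate of the impact of the lifecycle program as a whole.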

Finally, and crucially, what’s the process around ideation, creation, testing, iterating, and scaling of lifecycle campaigns and activity notifications? Is there a clear structure and method being applied by the team or individuals involved? Are experiments scientific and hypothesis-driven? Is meaningful iteration occurring? Is an internal playbook being developed that will help onboard new team members, as well as communicate the program goals, rationale and effectiveness throughout the broader organization?

The output of this review is a thorough understanding of the current marketing automation program, the process in place to deliver it and the impact it’s driving. This serves as a fantastic input into the next phase: developing a strategy to improve retention and a prioritized tactical plan to level-up marketing automation efforts in alignment with that strategy.

Coming Next: Creating a Strategic CRM Plan

In the next article in this series, I detail how the results of this first phase are used to develop a comprehensive action plan.

I’m always keen to hear from other growth practitioners; please feel encouraged to leave suggestions or insights from your own experience in the comments section.

Thanks to Regina Leuwer.
