Previously, we explored how surveys and interviews can be used to understand users’ app download decision-making and contribute to a sustainable uplift in conversion rate. In this article, we go further and show how user research can explain why people are, or aren’t, converting into installs.

Both surveys and interviews are great tools for understanding what people perceive as important. Even so, it is essential to distinguish between a user’s perceptions and their actions. For example, surveyed users might not explicitly say that app rank is the most important factor when deciding to download an app, yet we see in A/B experiments and through data mining that app rank does matter considerably. A user’s perceptions also shape the expectations and assumptions they bring with them when they arrive on the product page.

Surveys, in particular, provide a way to quantify such perceptions. So, how do you get the most out of your survey questions?

It’s worth pointing out that your research questions will rarely be user-friendly, so the survey questions you actually ask will need to be phrased differently. For example, a central research question might be “What matters most to someone when they’re downloading an app?”

Your survey questions will expand on this research question, and they must be constructed in a way that allows the researcher to derive actionable insights, either to inform a strategy or to guide further research. Let’s look at a few ways in which well-written survey questions can help answer that central research question: what matters most to someone when they are downloading an app?

Possible Factors to Guide Research

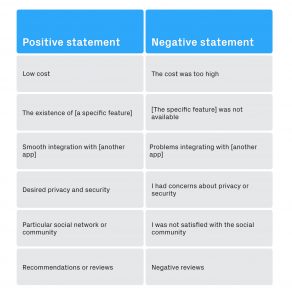

Let’s begin with a list of possible important factors that will guide your research and survey. These may arise from best practices in your industry, personas you have built, or from previous user interviews. For the rest of this article, we will be focusing on the following list of possible factors:

- Low cost

- The existence of a specific feature

- Smooth integration with another app

- Desired privacy and security

- Particular social network or community

- Recommendations or reviews

You can start with this list and refine it to fit your app’s key value proposition. If such a list seems difficult to create, first try a more generative research method, such as interviews, to identify topics for further research. Interviews are excellent for generating ideas, and even a few of them can improve the specificity and effectiveness of a survey.

A few interviews or pilot (soft-launch) survey responses can also help you “debug” your phrasing before you write the survey proper. Use your users’ terminology as much as possible, rather than the terms your product or marketing team is accustomed to. This is especially important for questions about features: in a pilot survey or an interview, make sure respondents actually understand the way you describe your feature.

Of course, you could just send a survey with one question (for example, “What matters most to you?”) and the list above. However, more specific questions tend to provide more actionable insights. Additionally, some questions make sense for current users, some for past users, and some for prospective users. Let’s look at some examples and dig deeper into what matters most to users.

Competitors: Which apps do you use and which app do you like best?

Here is a set of three questions:

1. Which apps do you currently use for [main purpose of your app]?

Respondents should be able to select multiple options from a list of competitors. Remember to add an additional field for ‘Other’.

2. What is your favorite app for [main purpose of your app]?

Respondents should be able to select one option only from the same list of competitors. Remember to add an additional field for ‘Other.’

3. Why is [the favorite app] your favorite?

Respondents should be able to select multiple options from the list of possible factors (see above).

You can analyze the responses as follows:

- Percentage of people who use a competitor app, compared to the percentage of people who consider the competitor to be the favorite. Do this for each of your key competitors.

- Are there different factors associated with different competitors? For each of the competitors, what is the distribution of the different factors?

- How many different apps do people use for a particular activity?
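The three analyses above can be sketched in a few lines of Python once the responses are exported from your survey tool. This is a minimal sketch, assuming each response is stored as a dict with the hypothetical keys `uses` (question 1), `favorite` (question 2) and `reasons` (question 3); the function names are illustrative and not part of any survey tool’s API.

```python
from collections import Counter
from statistics import mean

# Assumed (hypothetical) shape of one exported response:
# {"uses": ["AppA", "AppB"], "favorite": "AppA", "reasons": ["Low cost"]}


def usage_vs_favorite(responses):
    """Per competitor: (% of respondents who use it, % who name it their favorite)."""
    n = len(responses)
    # set() so a respondent who ticks the same app twice is counted once
    used = Counter(app for r in responses for app in set(r["uses"]))
    fav = Counter(r["favorite"] for r in responses)
    return {
        app: (100 * used[app] / n, 100 * fav[app] / n)
        for app in sorted(set(used) | set(fav))
    }


def factors_by_favorite(responses):
    """Per favorite app: % of that app's fans who cited each factor in question 3."""
    fans = Counter(r["favorite"] for r in responses)
    cited = {}
    for r in responses:
        cited.setdefault(r["favorite"], Counter()).update(set(r["reasons"]))
    return {
        app: {factor: 100 * count / fans[app] for factor, count in counts.items()}
        for app, counts in cited.items()
    }


def mean_apps_used(responses):
    """Average number of distinct apps each respondent uses for the activity."""
    return mean(len(set(r["uses"])) for r in responses)
```

Because question 3 is multi-select, the factor percentages for one app can add up to more than 100%; read each one as “share of this app’s fans who mentioned the factor”.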

Negative Example: Reasons for never using or for stopping using the app

Here’s a further set of questions:

1. Do you know about [your app]?

2. If yes, do you currently use [your app]?

Response options include:

- I have never used this app

- I have used this app before but stopped

If the answer to the first question is yes and the answer to the second question is “I have never used this app”:

3. Why have you never used this app?

If the answer to the first question is yes and the answer to the second question is “I have used this app before but stopped”:

4. What prompted you to stop using this app?
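Most survey tools let you configure this skip logic in their own interface, but it can help to write the routing down explicitly before building the survey. The sketch below expresses the branching above as a plain function; the answer strings and the name `follow_up_question` are hypothetical, and any other answer to question 2 is assumed to need no follow-up here.

```python
from typing import Optional

# Hypothetical answer strings for question 2 (copied from the response options)
NEVER_USED = "I have never used this app"
STOPPED = "I have used this app before but stopped"


def follow_up_question(knows_app: bool, usage_answer: Optional[str]) -> Optional[str]:
    """Return the follow-up question to show, or None if no follow-up applies."""
    if not knows_app:
        return None  # respondent doesn't know the app: skip questions 2-4
    if usage_answer == NEVER_USED:
        return "Why have you never used this app?"  # question 3
    if usage_answer == STOPPED:
        return "What prompted you to stop using this app?"  # question 4
    return None  # any other answer to question 2 gets no follow-up in this sketch
```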

Use negative versions of the possible factors listed above as the response options for questions 3 and 4, and allow respondents to select multiple options.

The key value of these questions is to understand the differences in perception between those who decided not to use the app and those who decided to stop using it.

For a factor such as cost, you might see that X% of respondents cite it as a reason for never starting, compared to Y% who name it as a reason for stopping. If X is much bigger (or much smaller) than Y, this may point to a communication problem around that particular factor.
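The X% versus Y% comparison is easy to compute for every factor at once. This is a minimal sketch, assuming the multi-select answers to questions 3 and 4 have each been coded as a list of factor names per respondent (mapping each negative statement back to its factor label is an assumption about how you record responses); `factor_shares` and `disconnects` are hypothetical helper names.

```python
from collections import Counter


def factor_shares(selections):
    """% of respondents in one group who selected each factor (multi-select)."""
    n = len(selections)
    counts = Counter(factor for chosen in selections for factor in set(chosen))
    return {factor: 100 * c / n for factor, c in counts.items()}


def disconnects(never_started, stopped):
    """X (never started) minus Y (stopped), in percentage points, per factor."""
    x = factor_shares(never_started)
    y = factor_shares(stopped)
    # A factor named by only one group counts as 0% in the other group
    return {f: x.get(f, 0.0) - y.get(f, 0.0) for f in set(x) | set(y)}
```

A large positive value means the factor mostly deters people from ever starting; a large negative value means it mostly drives existing users away.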

So, what matters?

Let’s pull these findings together. Since we used the same set of factors in both sets of questions, we can estimate how many people in the target audience value a particular factor in the scenarios above.

Let’s consider this overview of hypothetical numbers for a social app. In this overview, the most interesting observations are around differences of preference within each factor.

Here is an example interpretation:

Feature & Community: Here we can see that the community aspect of this app is perceived very poorly and leads to the app not being downloaded (a reason cited by 80% of people in this group). However, community is not the main reason people stop using the app; that reason is the feature itself (70%). There may be a technical issue with the feature, as well as a problem with how the community is perceived. Looking at the preferences of people who use a competitor app, we can see that community is a much bigger factor than the feature, so addressing it should be prioritized.

More generally, the interpretation can be as follows:

- What factors have the biggest ‘disconnects’ (the biggest difference in the percentage of responses) between the people who don’t start using the app, and those who stop using it?

- Among those factors with the biggest ‘disconnects,’ which matters most to users of the most relevant competitors?
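The two interpretation steps above can be expressed as a small ranking routine. This is a minimal sketch, assuming you already have, per factor, the disconnect (X minus Y, as described earlier) and the share of the most relevant competitors’ users who cite that factor. The name `prioritize` and its `top_n` cutoff are hypothetical, since the article does not say how many factors count as having the “biggest” disconnects.

```python
def prioritize(disconnect, competitor_share, top_n=3):
    """Rank factors for action.

    Step 1: keep the top_n factors with the largest absolute disconnect.
    Step 2: order those by how often competitor users cite them (highest first).
    """
    biggest = sorted(disconnect, key=lambda f: abs(disconnect[f]), reverse=True)[:top_n]
    # Factors that competitor users never cite are treated as 0%
    return sorted(biggest, key=lambda f: competitor_share.get(f, 0.0), reverse=True)
```

Sorting by absolute value treats a factor that deters new users and one that drives existing users away as equally “disconnected”; the sign of the disconnect still tells you which group the factor weighs on more.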

In this case, the answer to our question “What matters most to people when downloading this kind of app?” would be: community, cost, and reviews matter, but community is the most important consideration for this app in particular.

The most important principles used in this survey approach were:

- Having a concrete list of important possible factors, ideally based on prior insights.

- Asking both positive and negative questions to understand different aspects of download decision-making.

- Considering different groups of people, particularly non-users of the app in question.

Conclusion

Actionable insights come from being able to ask the right questions and interpret the answers in as unbiased a way as possible. Writing effective survey questions in the parlance of your existing users goes a long way to securing open and honest feedback. Be open to that feedback and be ready to bucket the themes that come up into common topics. This will make it easier for your product and ASO teams to action the insights collected.
