The most important decision a growth team needs to make on a regular basis is whether to re-invest in or divest resources from the current initiative(s). In my experience, this comes down to one question, which should be answered for each ongoing growth experiment on a weekly basis: Scale, Iterate, or Kill?

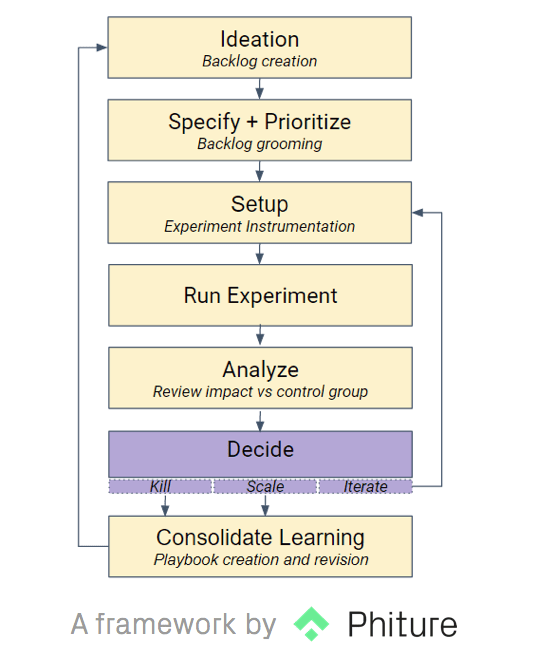

Important activities in the growth experiment cycle include ideation and hypothesis generation, prioritization of new ideas, specifying and instrumenting experiments, analyzing results, and communicating them to the broader team.

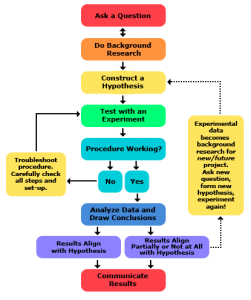

There’s nothing particularly new or innovative about the growth process: it’s essentially the classic scientific experimental process, applied to digital products (see the diagram on the left by Science Buddies).

Successful growth teams around the world are taking a similar approach to their growth experiments.

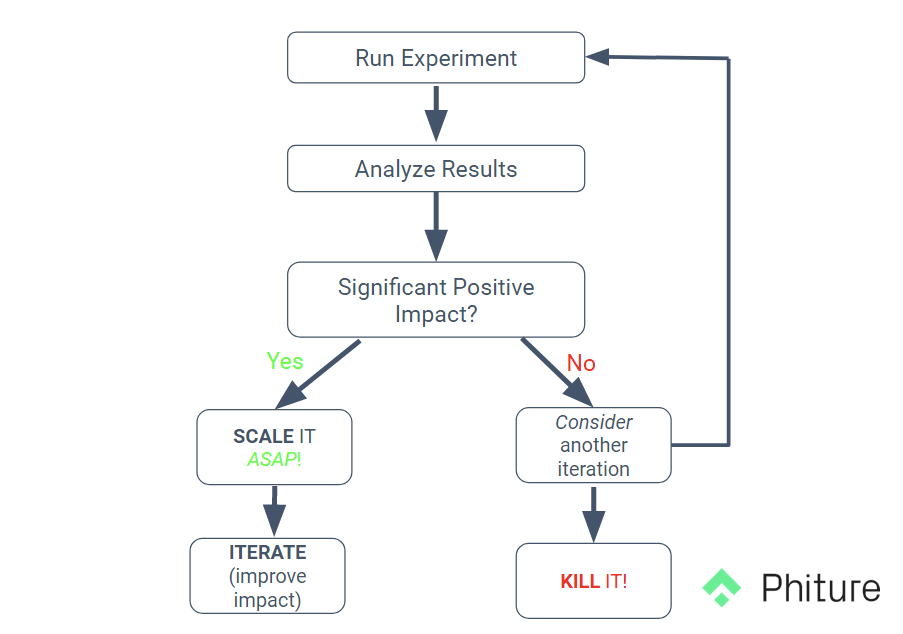

The Scale, Iterate or Kill question comes up at a crucial point in the process. The decision made here often marks the difference between capitalizing on the true potential of a successful experiment on the one hand and, on the other, failing to capture that impact while wasting further sprint cycles iterating on an idea that should have been scrapped or shelved.

It’s pretty simple, yet most teams routinely stumble at this step:

- If your experiment succeeded, scale it to your userbase (i.e. turn your experiment result into full impact).

- If your experiment failed, you should most likely kill the idea dead. It’s either a non-starter, or it might need considerable additional work to refactor it into a ‘better’ attempt that could still fail.

- If the results show some positive signs, but not strong or conclusive results, you may want to consider another iteration. You should still balance this against other ideas waiting in the backlog.
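The three branches above can be sketched as a simple decision rule. This is an illustration under stated assumptions, not a prescribed method: the `ExperimentResult` fields, the 0.01 significance threshold and the 0.2 "promising" threshold are hypothetical choices for the example. Note that the rule falls through to Kill unless the evidence argues otherwise, and that an Iterate outcome is only a candidate; it still has to be weighed against the backlog.

```python
from dataclasses import dataclass
from enum import Enum


class Decision(Enum):
    SCALE = "scale"
    ITERATE = "iterate"
    KILL = "kill"


@dataclass
class ExperimentResult:
    # Hypothetical fields: relative lift of treatment vs. control
    # (0.05 means +5%) and the p-value of the significance test.
    relative_lift: float
    p_value: float


def decide(result: ExperimentResult, alpha: float = 0.01,
           promising_p: float = 0.2) -> Decision:
    """Map an experiment result to Scale, Iterate or Kill.

    alpha and promising_p are illustrative thresholds, not recommendations.
    """
    # Clear, significant win: bank it by scaling to the whole userbase.
    if result.relative_lift > 0 and result.p_value < alpha:
        return Decision.SCALE
    # Positive but inconclusive signal: a candidate for another iteration.
    if result.relative_lift > 0 and result.p_value < promising_p:
        return Decision.ITERATE
    # Everything else (flat, negative, or noise): kill by default.
    return Decision.KILL


print(decide(ExperimentResult(relative_lift=0.05, p_value=0.003)))  # Decision.SCALE
print(decide(ExperimentResult(relative_lift=0.02, p_value=0.12)))   # Decision.ITERATE
print(decide(ExperimentResult(relative_lift=-0.01, p_value=0.40)))  # Decision.KILL
```

The thresholds are where a team encodes how high its burden of proof is; a stricter `promising_p` means fewer ideas survive into another iteration.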

In a healthy growth team, the majority of experiments should be killed, some should be scaled and a choice few deserve further immediate iteration. Let’s consider these three possible outcomes of the decision process:

Kill off failed initiatives (quickly!)

In general, the team should err on the side of ‘killing’ unsuccessful experiments.

It’s liberating to let go of ideas, cut losses, move on, and not get stuck in dead-horse-flogging mode; embrace the reality that many ideas simply aren’t winners.

Team dynamics might keep ideas in play when they should be killed. People get attached to bad ideas and ego prevents them from admitting when ideas are not working; they blame the experiment setup, the analysis, or external factors and argue for another iteration. All of those could be valid reasons to stick with the experiment, or they may represent a refusal to accept failure.

To build an organizational immunity to becoming attached to bad ideas, it’s helpful for growth teams to deliberately adopt a default pro-kill stance in the Decide step of the experimental process. Requiring a high burden of proof to decide not to kill an idea that fails to demonstrate impact in the first iteration forces team members to build an empirical case for re-investing in a further iteration.

No Shame in Failing

In a high-functioning growth team, there is no shame in a failed experiment; the majority of experiments will fail and the team is fine with this, as long as momentum is kept high. There should be shame, however, in repeatedly iterating on a bad idea, at the expense of potentially better ones, in the face of mounting evidence that it’s not going to deliver impact.

Killing an idea = stimulus for learning

Upon killing an experiment, consider what has been learned. The idea didn’t work, at least in this formulation, but why? This question is a trigger for further research and analysis. This, in turn, might lead to an improved incarnation of the idea at a later stage. Any experiment that leads to a deeper understanding of user behavior and psychology should not be considered a waste of time.

Celebrate the Kill

For the reasons above, high-functioning growth teams celebrate killing experiments and look forward to testing the next idea. The process of acknowledging the ‘kill’, documenting learning, and then letting go frees up mental space and allows the team to focus on the upcoming experiment, which could be a winner.

In our weekly meetings in the growth team I led at SoundCloud, we congratulated the originator of every idea we killed and high-fived to celebrate making progress and keeping momentum. This celebration often occurred more than once per meeting; we killed a lot of stuff that wasn’t working and grew to love doing it. It became part of our ethos to move fast, test things, scale what worked, and kill what didn’t. If we felt that someone was too attached to their initiative, we’d call them out on it; occasionally this happened to me. It was a safe space to do this and led to the team coalescing around a healthy, ego-less, and rational approach to our work.

If it works, scale it right away

Most experiments are conducted on less than 100% of the target audience. Usually, a test group (sometimes referred to as the ‘treatment group’), made up of a proportion of the overall userbase, is exposed to the experiment: a product change, a new feature, or a messaging campaign, for example. Meanwhile, a control group receives the ‘regular’ (baseline product) experience without the experimental changes. The rest of the user population either experiences the baseline or is part of other experimental groups.
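One common way to split a userbase into treatment, control and everyone else is to hash each user ID into a stable bucket. The sketch below is illustrative only: the experiment name `registration-flow-v2`, the 10%/10% split and the `assign_group` helper are hypothetical, and real experimentation platforms differ in the details.

```python
import hashlib


def assign_group(user_id: str, experiment: str,
                 treatment_pct: int = 10, control_pct: int = 10) -> str:
    """Deterministically assign a user to 'treatment', 'control' or 'other'.

    Hashing user_id together with the experiment name gives each user a
    stable bucket in [0, 100) per experiment, so the same user always sees
    the same variant, and different experiments split users roughly
    independently of each other.
    """
    digest = hashlib.sha256(f"{experiment}:{user_id}".encode("utf-8")).hexdigest()
    bucket = int(digest, 16) % 100
    if bucket < treatment_pct:
        return "treatment"
    if bucket < treatment_pct + control_pct:
        return "control"
    # Remaining users get the baseline or are free for other experiments.
    return "other"


counts = {"treatment": 0, "control": 0, "other": 0}
for i in range(10_000):
    counts[assign_group(f"user-{i}", "registration-flow-v2")] += 1
print(counts)  # roughly 1,000 / 1,000 / 8,000
```

Keeping the control group the same size as the treatment group keeps the comparison simple; the remaining 80% is left untouched here.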

What we often see while working with our clients at Phiture is teams that get stuck in ‘test mode’: they run experiments, analyze the results, and generate learnings. They then test some new ideas and learn from those too. Surprisingly often, teams fail to capitalize on successful experiments by scaling them from Test to Production.

For example, a growth team sees a 5% increase in signups after testing a change to their registration flow. The result is statistically significant at the 99% confidence level.

This is a strong result and should be rolled out to the entire userbase, ASAP!
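To make "statistically significant at the 99% confidence level" concrete, here is a minimal two-proportion z-test on hypothetical numbers (40,000 users per group, a 20.0% vs. 21.0% signup rate, i.e. a 5% relative lift). The counts are invented for illustration, and the article does not say which test was used; the z-test is simply one standard choice for comparing conversion rates.

```python
import math


def two_proportion_z_test(conv_a: int, n_a: int, conv_b: int, n_b: int):
    """Two-sided z-test for a difference between two conversion rates.

    Returns (relative_lift, z, p_value), where the lift is B relative to A.
    """
    p_a = conv_a / n_a
    p_b = conv_b / n_b
    # Pooled rate under the null hypothesis that A and B convert equally.
    p_pool = (conv_a + conv_b) / (n_a + n_b)
    se = math.sqrt(p_pool * (1 - p_pool) * (1 / n_a + 1 / n_b))
    z = (p_b - p_a) / se
    # Two-sided p-value from the standard normal distribution.
    p_value = math.erfc(abs(z) / math.sqrt(2))
    return (p_b - p_a) / p_a, z, p_value


# Hypothetical: control 8,000 / 40,000 signups, treatment 8,400 / 40,000.
lift, z, p = two_proportion_z_test(8_000, 40_000, 8_400, 40_000)
print(f"lift={lift:.1%}  z={z:.2f}  p={p:.4f}  significant at 99%: {p < 0.01}")
```

With these sample sizes a one-point difference clears the 99% bar comfortably; with a few thousand users per group the same 5% lift would not.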

Bank the wins before iterating for higher impact

Is it the best result possible? Almost certainly not; it may be possible to reach a 10% increase, a 50% increase, or even a 200% increase with further iteration, or with a radically different approach. But it’s a significant improvement on the baseline. By all means: continue to iterate on the registration flow, but ‘bank’ the 5% uplift by rolling it out to the entire userbase; more users will complete the registration process while ongoing iteration and ideation on the registration flow happens in a subsequent growth sprint. Over time, small gains like these add up like compound interest, but only if they are scaled to production (banked), as opposed to being left as test results.
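The compound-interest analogy can be checked with a little arithmetic. This sketch assumes, hypothetically, ten separate 5% wins that each multiply the same metric and persist once banked; in practice wins can overlap or fade, so treat the numbers as an idealized upper bound rather than a forecast.

```python
def banked_uplift(uplifts: list[float]) -> float:
    """Combined effect of sequential wins that each multiply the metric."""
    total = 1.0
    for u in uplifts:
        total *= 1 + u
    return total - 1


# Hypothetical: ten separate 5% wins, each scaled to production in turn.
wins = [0.05] * 10
print(f"compounded: {banked_uplift(wins):.1%}")  # compounded: 62.9%
print(f"simple sum: {sum(wins):.1%}")            # simple sum: 50.0%
```

Each banked win raises the baseline that the next win multiplies, which is why the compounded total exceeds the simple sum. A win left as a test result contributes nothing to this product.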

Validate learning

Scaling validates learning and re-confirms (or falsifies) the experimental result, with a larger sample and over a longer time period. This can lead to further insight into user behavior and could lead to a change in direction and a deeper understanding of how users interact with the product.

Rollbacks won’t result in deaths

Finally, it should be kept in mind that rollbacks, while not desirable, are entirely possible, should scaling turn out to have been a mistake. Unlike medical experiments, growth experiments with mobile apps rarely have fatal consequences; if a winning experiment is scaled to production and introduces unintended side-effects or under-performs versus expectations, the situation is fixable.
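One way to keep a scaled change reversible is to ship it behind a percentage-based flag with a simple guardrail. The sketch below is a hypothetical illustration: the `Rollout` class, the `check_guardrail` helper and the 2% relative tolerance are assumptions for the example, not a specific feature-flag product's API.

```python
import hashlib
from dataclasses import dataclass


@dataclass
class Rollout:
    """A hypothetical percentage-based feature flag for a scaled experiment."""
    name: str
    percent: int = 0  # share of users (0-100) who get the new experience

    def is_enabled(self, user_id: str) -> bool:
        # Stable per-user bucket, so raising the percentage only adds users.
        digest = hashlib.sha256(f"{self.name}:{user_id}".encode("utf-8")).hexdigest()
        return int(digest, 16) % 100 < self.percent

    def scale_to(self, percent: int) -> None:
        # Clamp to a valid percentage.
        self.percent = max(0, min(100, percent))

    def rollback(self) -> None:
        # Everyone returns to the baseline experience.
        self.percent = 0


def check_guardrail(rollout: Rollout, observed_rate: float,
                    baseline_rate: float, tolerance: float = 0.02) -> bool:
    """Roll back if the metric falls more than `tolerance` (relative) below baseline.

    Returns True if a rollback was triggered.
    """
    if observed_rate < baseline_rate * (1 - tolerance):
        rollout.rollback()
        return True
    return False


flag = Rollout("registration-flow-v2")
flag.scale_to(100)  # bank the win for the whole userbase
print(flag.is_enabled("user-42"))  # True
# Hypothetical production numbers: 19.0% signup rate vs. a 20.0% baseline.
print(check_guardrail(flag, observed_rate=0.19, baseline_rate=0.20), flag.percent)  # True 0
```

Because the rollback only resets a percentage, undoing a mistaken scale-up is cheap, which is the point the paragraph above makes.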

(Maybe) Iterate when things are inconclusive

Most experiments won’t yield a positive, statistically significant result. If an experiment fails to significantly outperform the control group, or delivers lower impact, it’s probably because:

- The idea lacks the potential to drive positive impact (put simply: the idea is not a winner)

- The idea has potential, but the implementation was poor (poorly constructed hypothesis, poor UX of the experiment, poor wording of copy in a message, etc.)

Not all ideas will be winners; most won’t. How should a team decide whether to iterate on an experimental initiative that delivered a mediocre or bad result, or move on to the next idea in the backlog?

If this were an easy decision, growth would be a much simpler exercise. In an ideal world, the team develops experience in making these judgment calls over time, but in any case, the decision on whether to iterate should be taken in the context of the rationale behind the experiment as well as the other ideas in the backlog.

Was the hypothesis formed from robust qualitative research, quantitative behavioral or market data, or from hunch or ‘gut feel’?

If there is strong data behind it, with logical reasoning that the experiment should have been impactful, then perhaps it’s worth another iteration, trying to test the hypothesis again with a ‘better’ experiment, or a slightly-revised hypothesis.

If the hypothesis was based on intuition, there’s a weaker case for continuing to invest in it, though psychologically it might be hard for the originator of the idea to accept this.

What other ideas are in the backlog?

If there are ideas that the team believes have a better chance of success than another iteration of the current experiment, these ideas should probably be tested first, before returning to another iteration. The team can always return for further iterations on inconclusive experiments when the backlog contains fewer new promising ideas.

Was there significant set-up time and/or resource coordination required to implement the experiment?

If there are specialist resources, outsourced partners, or technology that were brought in especially to test this particular hypothesis, such that shelving the experiment and re-visiting it at a later time would incur a double-hit in terms of coordination and resourcing, this could be an argument for iterating further. Long set-up times between experiments or iterations are a killer to team momentum and should be avoided if possible.
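The three questions above can be turned into a rough checklist. This is a hypothetical heuristic for illustration: the field names and, especially, the order in which the factors are weighed are assumptions, and the team's own judgment should override it.

```python
from dataclasses import dataclass


@dataclass
class InconclusiveExperiment:
    # Hypothetical inputs mirroring the three questions above.
    evidence_based: bool        # hypothesis grounded in research or data, not gut feel
    better_ideas_waiting: bool  # backlog holds ideas the team rates as more promising
    costly_to_restart: bool     # special resources or partners would need re-coordinating


def should_iterate_now(exp: InconclusiveExperiment) -> bool:
    """Rough, illustrative heuristic for an inconclusive result."""
    # Without evidence behind the hypothesis, the default is to move on.
    if not exp.evidence_based:
        return False
    # Expensive set-up that would be lost by shelving tips the balance toward iterating.
    if exp.costly_to_restart:
        return True
    # Otherwise, test stronger backlog ideas first; this one can be revisited later.
    return not exp.better_ideas_waiting


print(should_iterate_now(InconclusiveExperiment(True, True, False)))   # False
print(should_iterate_now(InconclusiveExperiment(True, False, False)))  # True
```

Putting the evidence check first mirrors the default pro-kill stance: a gut-feel hypothesis needs no further deliberation to be shelved.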

Getting good at the Decide step should be a key goal for growth teams; it doesn’t matter how good the results are if the impact is not scaled.

To quote Jeff Bezos: “To invent you have to experiment, and if you know in advance that it’s going to work, it’s not an experiment.”

Following the scale, iterate, kill process rigorously enables growth teams to move faster and increase the number of ideas they can test, which is a key goal of a strong growth setup. Actively celebrating Kills increases momentum and helps to avoid a middle-of-the-road mentality where only mediocre ideas are tested. Scaling wins to production maximizes impact as the team moves forward.

It’s also a lot more fun once you learn to let your killer instinct run free 😉